XOHOLO, point cloud cinema at RML CineChamber

My Role

Creative Direction, Technical Direction, 3D Design

Product Type

Art Installation

XOHOLO is an immersive audiovisual art installation created for CineChamber, a surround-screen projection environment conceived by Recombinant Media Labs (RML). A modular cube more akin to Stonehenge than a movie theater, CineChamber has been exhibited internationally at film festivals and art events for over a decade. In 2021, Gray Area in San Francisco partnered with RML to host the system on-site, inviting a select group of artists to prototype new forms of spatial cinema.

Partners

Lifecycle

Residency Period April–October 2021

Key Skills & Tools

In-house Game Tooling, Front-end Scripting, Photoshop, Illustrator, Prototyping

Credits

Creative Direction: Gary Boodhoo, Yoann Resmond
Production: Naut Humon
Technical Art Direction, 3D Design: Gary Boodhoo
Sound Design: Yoann Resmond
Projectionist: Steve Pi

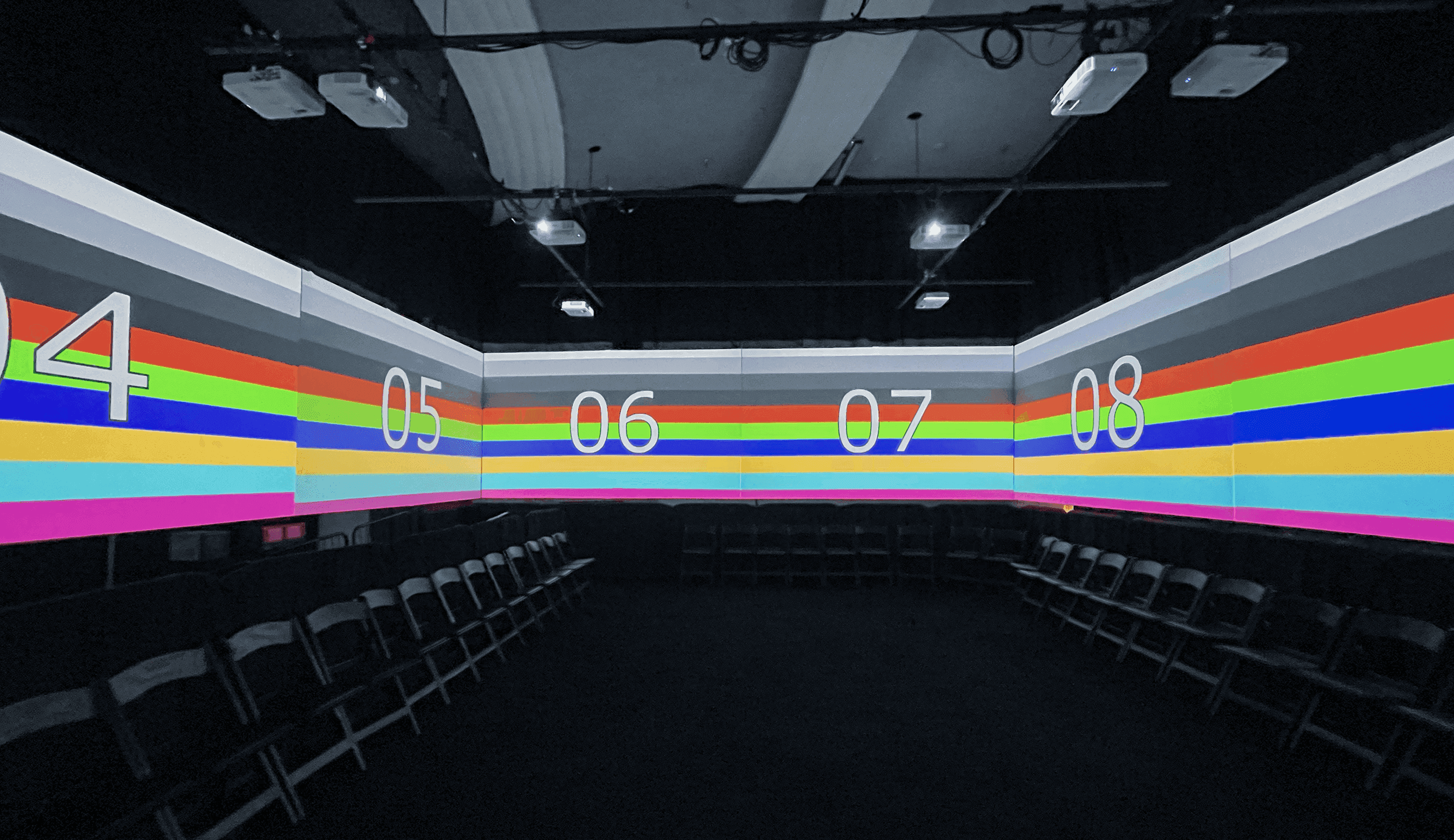

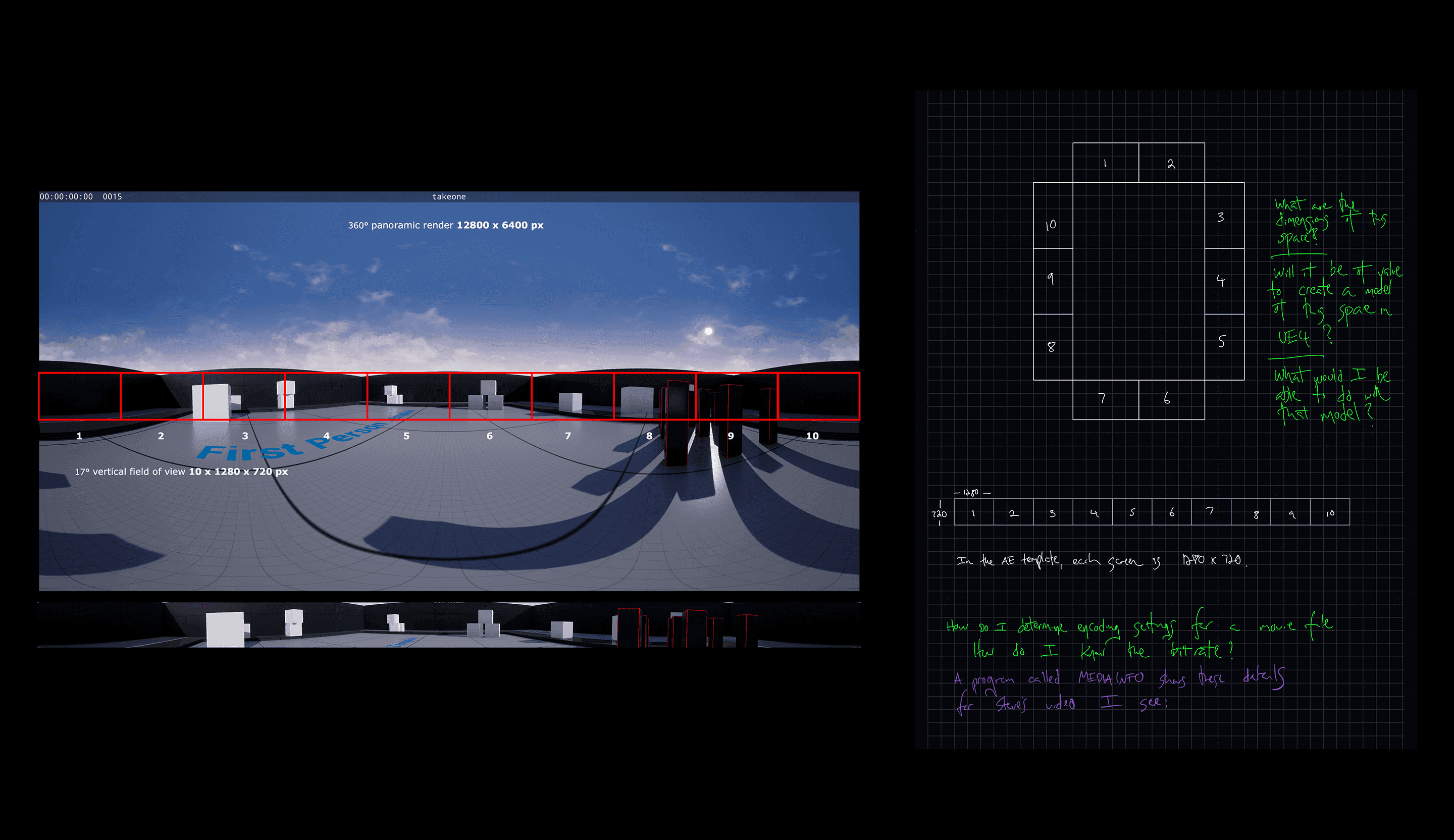

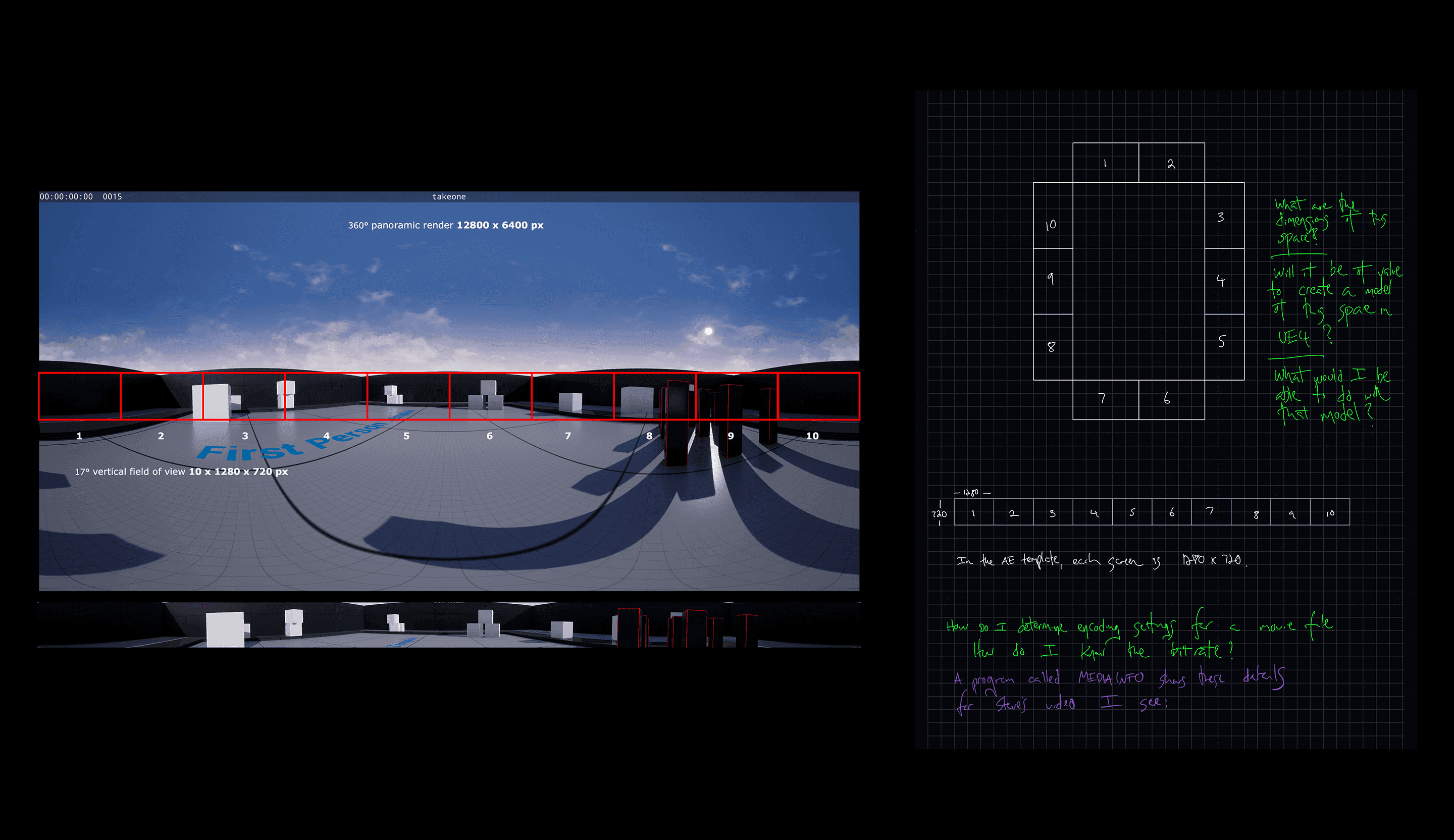

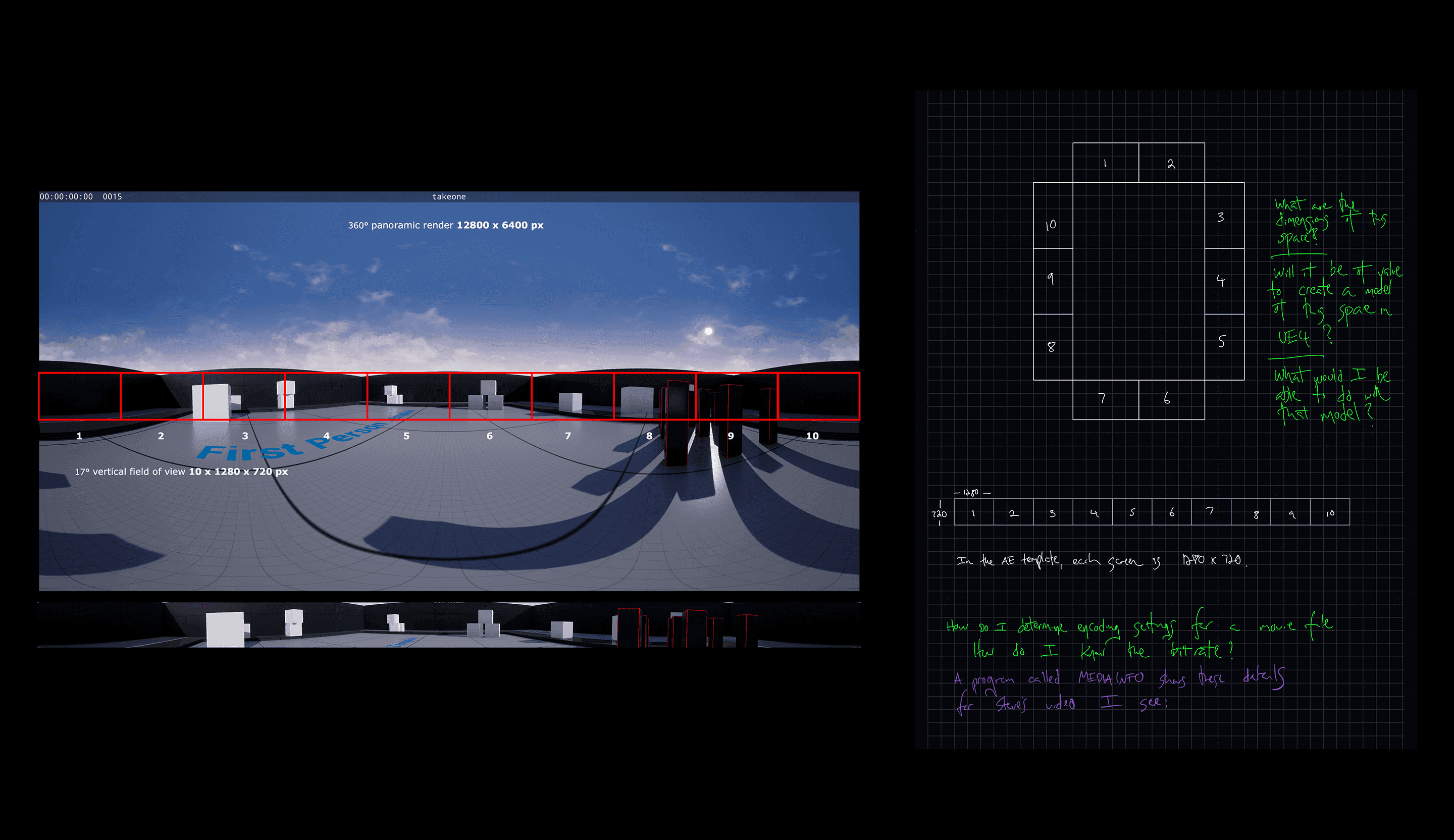

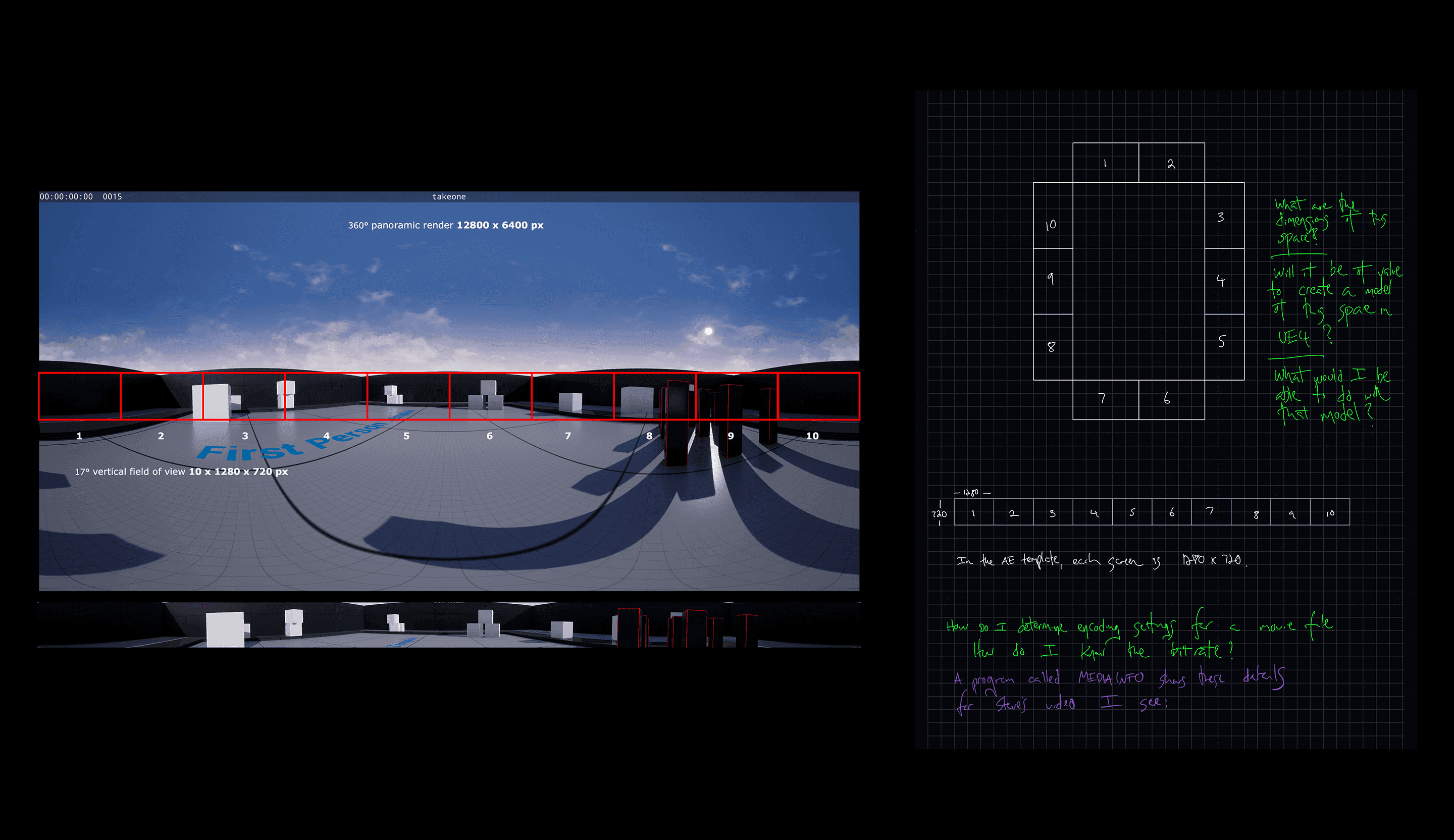

Rendering the space

One environment, ten perspectives

The chamber’s cubic form and compressed vertical view imposed a hard constraint on asset production: every frame had to keep correct perspective while filling all ten vertical screens without distortion. Using a custom camera Blueprint in Unreal Engine, I generated panoramic renders. From each frame I cropped a slice matching the display height, then distributed it across the ten projectors in TouchDesigner for seamless 360° coverage.
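
The slicing step can be sketched in Python as a function that computes one crop rectangle per viewport. This is a minimal sketch under simplifying assumptions that do not come from the project: the panorama is a single 360° strip, every screen takes an equal share of its width, and all screens share one aspect ratio (the 16/9 default is a placeholder). The names `CropBox` and `viewport_crops` are hypothetical; the production crops were made in After Effects.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CropBox:
    """Pixel rectangle in panorama coordinates; right and bottom are exclusive."""
    left: int
    top: int
    right: int
    bottom: int


def viewport_crops(pano_w: int, pano_h: int, n_screens: int = 10,
                   screen_aspect: float = 16 / 9) -> list[CropBox]:
    """Split a 360° panorama into n_screens adjacent vertical slices.

    Hypothetical assumptions: each screen covers an equal share of the
    panorama width and has the same width/height ratio (screen_aspect).
    Each slice is trimmed to a vertically centered band of that ratio.
    """
    if n_screens <= 0 or pano_w < n_screens:
        raise ValueError("need at least one pixel column per screen")
    crops = []
    for i in range(n_screens):
        # Integer boundaries so the slices tile the full width with no gaps.
        left = i * pano_w // n_screens
        right = (i + 1) * pano_w // n_screens
        # Band height that gives the slice the screen's aspect ratio.
        band_h = min(pano_h, round((right - left) / screen_aspect))
        top = (pano_h - band_h) // 2
        crops.append(CropBox(left, top, right, top + band_h))
    return crops
```

For a 7680 × 2160 render, each of the ten slices is 768 px wide and keeps rows 864 to 1295. Integer division keeps slice boundaries contiguous even when the panorama width is not a multiple of ten.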

Before immersion.

Projection calibration in the CineChamber array at Gray Area, San Francisco, aligning ten synchronized projectors into seamless 360° coverage.

Photograph Gary Boodhoo, Skinjester Studio

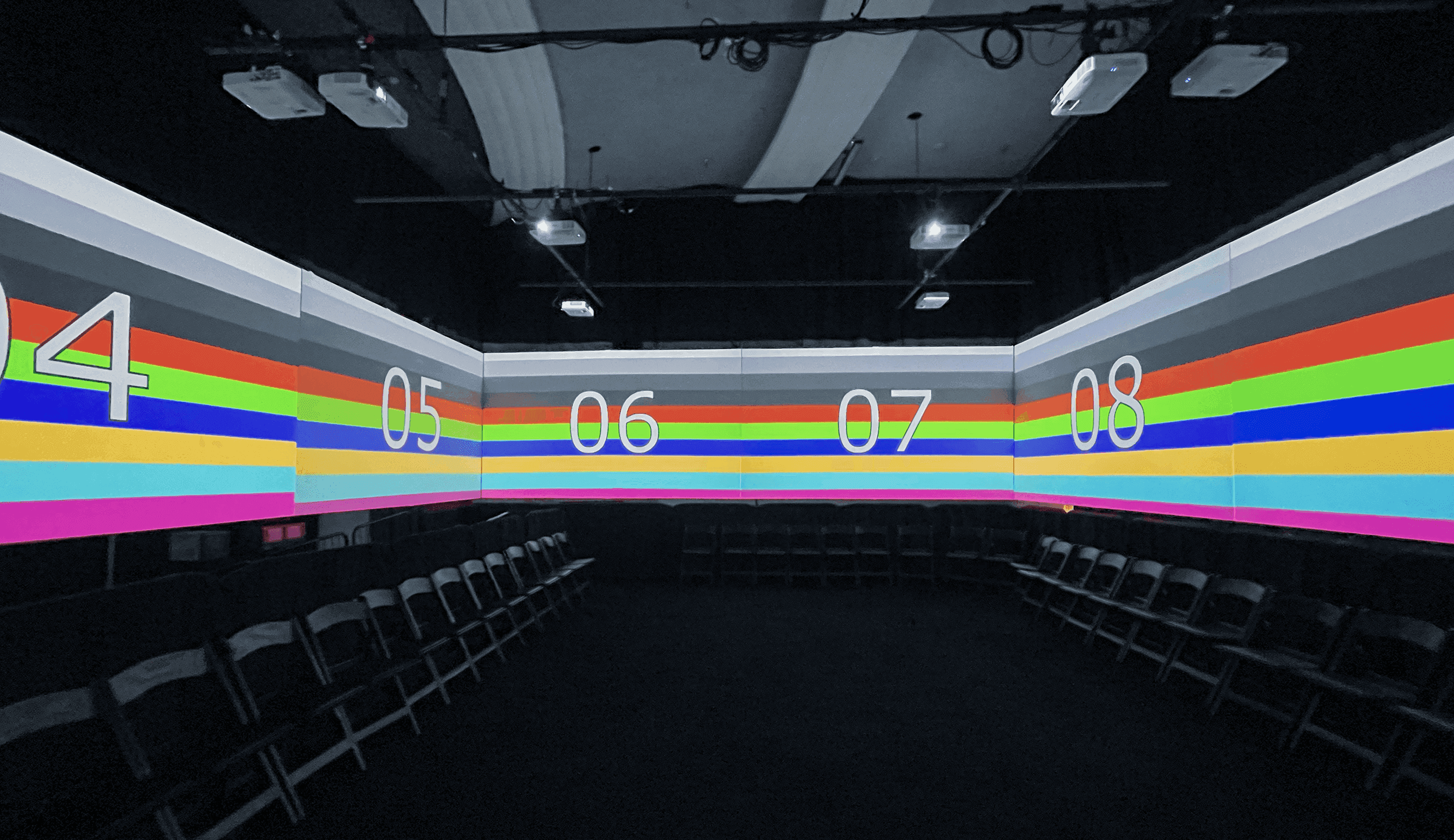

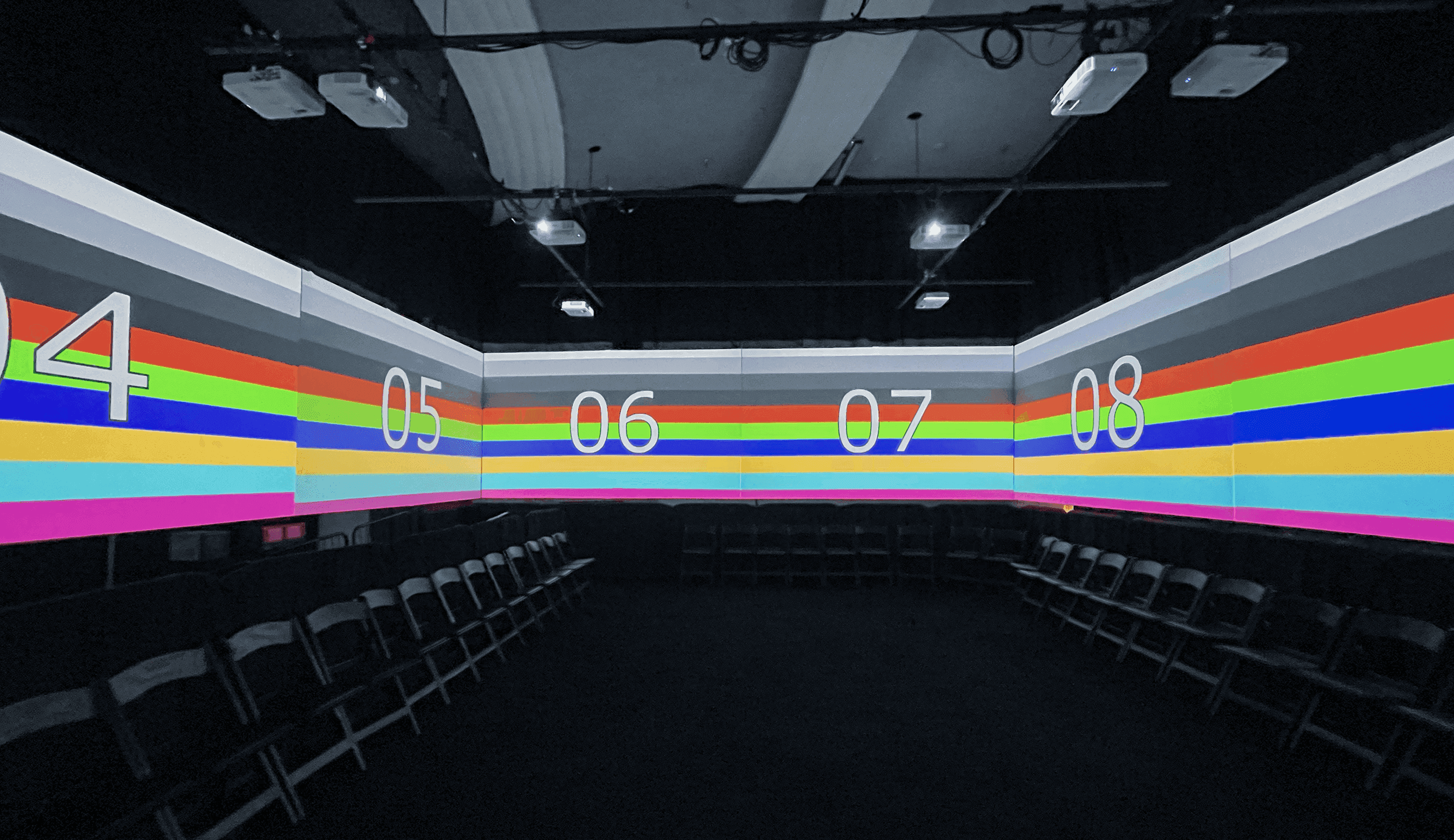

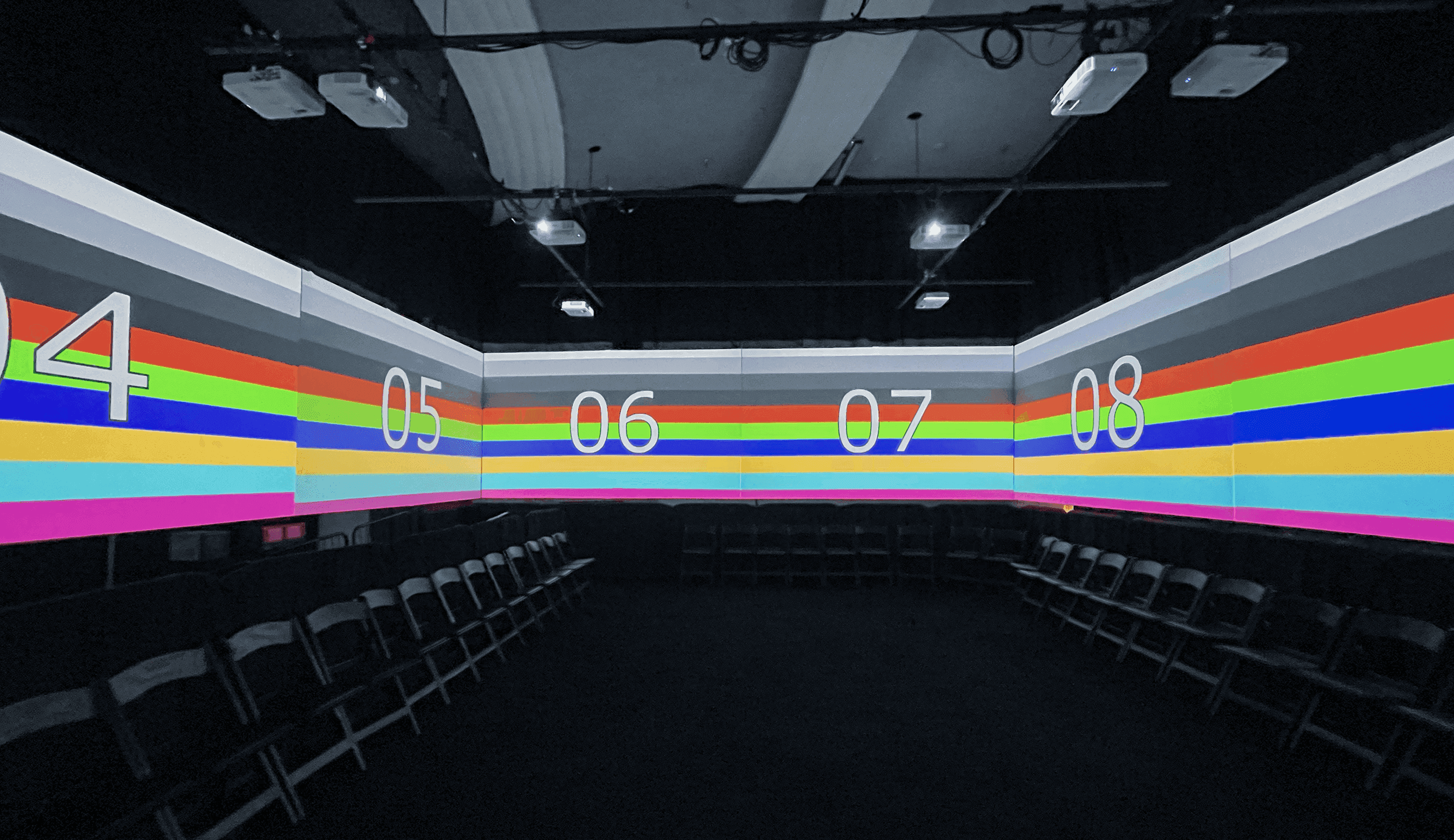

Viewport mapping, production notes

A 360° Unreal panorama segmented into ten vertical viewports and registered to the physical layout, aligning virtual camera geometry with the room’s projection system.

3D Design Gary Boodhoo, Skinjester Studio

Aesthetics

I thought spectacle was enough. It wasn’t.

Early content tests with video game–style environments looked good but lacked heart. The scenes were visually rich, yet they didn’t evoke a personal connection. Even so, these early attempts validated the technical direction: the rendering pipeline worked as intended, which freed me to shift toward a more grounded approach.

Projection test

I used a third-party scene from the Unreal Marketplace to test how atmosphere and motion translated to the venue.

Projection Mapping Gary Boodhoo, Steve Pi

Low-stakes LiDAR

Low-res scans, high-res presence

Using my iPhone’s LiDAR, I began casually scanning everyday objects and familiar spaces. I experimented with walking scans and layered exposures, capturing motion either by moving with the subject or by letting the subject shift during the scan. The results were rough but expressive, more like memory than measurement. Imperfections became a feature, turning scan noise into atmospheric texture.

Scanning While Moving

LiDAR capture of a walking subject appears as overlapping point distributions in space. Slicing the volume reconstructs the motion.

3D Design Gary Boodhoo, Skinjester Studio
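
One way to picture "slicing the volume" is to bin the captured points into equal-thickness slabs along a single axis. The Python below is a minimal sketch, not the project's CloudCompare or Niagara workflow: it assumes points arrive as plain (x, y, z) tuples and that the chosen axis roughly follows the subject's direction of travel, so successive slabs approximate successive moments of the walk. `slice_points` is a hypothetical name.

```python
from typing import Sequence

Point = tuple[float, float, float]


def slice_points(points: Sequence[Point], axis: int = 0,
                 n_slices: int = 8) -> list[list[Point]]:
    """Bin points into n_slices equal-thickness slabs along one axis.

    Assumption: if the subject moved steadily along `axis` during the scan,
    each slab holds points captured at roughly the same moment.
    """
    if not points or n_slices <= 0:
        return []
    coords = [p[axis] for p in points]
    lo, hi = min(coords), max(coords)
    # Fall back to 1.0 for a flat cloud so we never divide by zero.
    thickness = (hi - lo) / n_slices or 1.0
    slabs: list[list[Point]] = [[] for _ in range(n_slices)]
    for p in points:
        # Clamp so a point at exactly `hi` lands in the last slab.
        idx = min(int((p[axis] - lo) / thickness), n_slices - 1)
        slabs[idx].append(p)
    return slabs
```

Rendering each slab on its own, in order, plays the overlapping distribution back as a sequence of poses.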

Multiple Exposures

The subject shifted pose mid-scan, creating an overlapping point distribution that appears as a duplicate in the capture. This artifact introduced a performative element into what would otherwise be a static point cloud.

3D Design Gary Boodhoo, Skinjester Studio

Filling the Room

Ten screens, one voice

I processed the point clouds using Niagara, Unreal Engine’s visual scripting system for creating and managing particle effects. Each point became a responsive particle, animated by sound, motion, and spatial logic. Niagara had a learning curve, but it also left room for happy accidents. It became a way to compose across surfaces and fill the room with movement and rhythm.

Pipeline Overview:

LiDAR Capture (iPhone) → Point Cloud Processing (MeshLab, CloudCompare) → Import to Unreal Engine → Particle Animation (Niagara) → Panoramic Render (Unreal) → Cropping & Video Encoding Per Viewport (After Effects) → Synchronized Video Playback to 10-unit Projection Array (TouchDesigner)
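
Niagara systems are built as visual graphs, so the following is not Niagara code. It is a minimal Python sketch of one kind of sound-driven particle behavior described above, assuming a per-frame audio envelope already normalized to 0–1 and a cloud centered on the origin. The function name, its parameters, and the radial-ripple rule are hypothetical illustrations, not the project's actual Niagara graph.

```python
import math


def audio_displace(points, amplitude, t, strength=0.5, freq=2.0):
    """Push each point outward from the origin by an audio-driven amount.

    amplitude: normalized audio envelope for this frame (0 = silence, 1 = peak).
    The sine term is phased by distance from the origin, so the push travels
    outward as a ripple over time instead of scaling the cloud uniformly.
    """
    out = []
    for x, y, z in points:
        r = math.sqrt(x * x + y * y + z * z)
        if r == 0.0:
            out.append((x, y, z))  # no defined outward direction at the origin
            continue
        push = strength * amplitude * (0.5 + 0.5 * math.sin(freq * t - r))
        scale = (r + push) / r  # move along the unit radial vector by `push`
        out.append((x * scale, y * scale, z * scale))
    return out
```

At silence the cloud holds still; louder passages swell it outward in waves, which is the kind of rhythm-on-surfaces effect the paragraph describes.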

I reconstructed the space at scale in Unreal to preview 360° projections. By reassigning the ten video feeds away from the default 1:1 projector mapping, I increased visual intensity through repetition and misalignment across the CineChamber array.

Unreal Engine Previsualization Gary Boodhoo, Skinjester Studio
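
The feed reassignment can be modeled as a table that says which feed each projector plays. The routing itself lived in TouchDesigner; this Python sketch only illustrates the idea, assuming projectors are numbered 0–9 around the room. All function names are hypothetical.

```python
def identity_map(n: int = 10) -> list[int]:
    """Default 1:1 mapping: projector i shows feed i."""
    return list(range(n))


def rotated_map(n: int, offset: int) -> list[int]:
    """Shift every feed `offset` projectors around the ring (misalignment)."""
    return [(i + offset) % n for i in range(n)]


def repeated_map(n: int, period: int) -> list[int]:
    """Cycle the first `period` feeds around the room (repetition)."""
    return [i % period for i in range(n)]


def assign_feeds(feeds: list[str], mapping: list[int]) -> list[str]:
    """Return what each projector plays; mapping[p] is the feed index for projector p."""
    if any(not 0 <= m < len(feeds) for m in mapping):
        raise ValueError("mapping refers to a feed that does not exist")
    return [feeds[m] for m in mapping]
```

Because a mapping may repeat indices, the same footage can appear on several walls at once; a rotated map keeps all ten feeds but offsets each from the screen its slice was rendered for.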

Co-presence

Point cloud cinema

XOHOLO demonstrated a virtuality based on projecting space outward, not inward. That shift made it possible to share the experience with others. LiDAR snapshots of familiar things became an unexpectedly rich resource. Point cloud cinema emerged from their rhythmic transformations in projected virtual space.

Project rehearsal at the Grand Theater

3D Design Gary Boodhoo Sound Design Yoann Resmond

Outcome

I reimagined point cloud scans as projection-mapped environments, integrating capture, rendering, and projection methods to evoke an immersive, theater-scale experience.

Methods and workflows from XOHOLO are transferable to multi-screen retail, exhibition, and experiential product environments.
